5.2.2 Media Diet

TouchDesigner and Media

TouchDesigner is node-based software that combines nodes with code (Python scripting) to create visual outcomes. I follow several creators on TikTok, Instagram and other social media platforms who all use TouchDesigner. Its audio analysis features let you create audio-reactive visuals.

Before 5.2.2, I had never used TouchDesigner, so I took the time to download it and test it out for myself. At first I was very confused, as I had never used software even remotely similar. To start, I watched a beginner YouTube tutorial that covered each node type and how the main tools work.

Below are some videos I like from various social media platforms; they were the main inspiration behind my media diet project.

After Effects, using Displacement Pro, applied to footage from Blade Runner 2049.

TouchDesigner: hand movement increases the turbulence and colour.

TouchDesigner again: ASCII letters build up an image, and the closer the hand is to the camera, the larger the letters.

From user @KSLR, who makes his own music and visuals using TouchDesigner.
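The ASCII effect above can be illustrated outside TouchDesigner. The Python below is a minimal sketch of the general technique, not @KSLR's actual network: each pixel's brightness picks a character from a ramp, and a hand-distance reading sets the letter size. The character ramp, the 0.2 to 1.0 m distance range, the 8 to 48 px sizes and the function names are all hypothetical; in a real patch the distance would come from a hand-tracking input.

```python
# Hypothetical sketch: brightness -> ASCII character, hand distance -> letter size.
# The ramp, distance range and pixel sizes are illustrative assumptions.

RAMP = " .:-=+*#%@"  # ordered from darkest to brightest


def to_ascii(pixels: list[list[float]]) -> list[str]:
    """Convert rows of brightness values in [0, 1] to rows of ASCII characters."""
    rows = []
    for row in pixels:
        chars = []
        for value in row:
            v = min(max(value, 0.0), 1.0)  # clamp to the valid range
            index = int(v * (len(RAMP) - 1) + 0.5)  # round to the nearest ramp step
            chars.append(RAMP[index])
        rows.append("".join(chars))
    return rows


def letter_size(hand_distance_m: float, near: float = 0.2, far: float = 1.0,
                min_px: float = 8.0, max_px: float = 48.0) -> float:
    """Linearly map hand distance to a font size: a nearer hand gives larger letters."""
    d = min(max(hand_distance_m, near), far)  # keep the reading inside the range
    t = (far - d) / (far - near)  # 1.0 when the hand is nearest, 0.0 when farthest
    return min_px + t * (max_px - min_px)


if __name__ == "__main__":
    image = [[0.0, 0.3, 0.6, 1.0], [1.0, 0.6, 0.3, 0.0]]
    for line in to_ascii(image):
        print(line)
    print(letter_size(0.3), letter_size(0.9))
```

A linear distance mapping is the simplest choice; an inverse or eased curve would make the letters grow more dramatically as the hand approaches.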

Music Videos

A$AP Forever - A$AP Rocky ft Moby. Directed by Dexter Navy.

A$AP Forever is one of my favourite music videos of all time. It uses smooth gyroscopic transitions to switch scenes, mixed with clean blended transitions and clever zooms.

Born in London, England, Dexter Navy is an artist, film director and photographer. After studying in London, he went on to direct Grammy-nominated music videos and developed his passion for feature films by learning the process behind every aspect of his field. Navy’s aesthetic derives from an Egyptian/English heritage that taught him to combine art, craft and design. These influences, along with contemporary culture, make up Navy’s interdisciplinary practice today.

A$AP Forever didn't win any major awards, but it was the lead single from A$AP Rocky's album Testing and is certified gold.

T69 Collapse - Aphex Twin. Directed by Weirdcore.

Weirdcore is a London-based audio-visual artist who is half English and half French; the result is a director and collaborator who is one hundred percent out there.

Weirdcore has worked with the likes of M.I.A., Mos Def and Aphex Twin. The artist, Nicky Smith, keeps his identity hidden from the wider public. He even pixelates his own keynote speeches so people can't figure out what his voice sounds like.

Through the 90s and into the mid-2000s, Weirdcore made his name across London's venues and squat parties. He also studied media communications at Leeds University.

'Weirdcore and Aphex’s inaugural show together came in March 2009 at Bloc festival in Minehead, just one month after James first made contact with Weirdcore. “I had very little time to put it all together, especially as we had a screaming seven-month-old to look after at the time,” he says. “That was pretty hard.” Over time, the Aphex Twin live show has grown. In 2017, Fuji Rock, Barcelona, Porto, Finland, Ireland and London’s Field Day festival were co-ordinates for the first Aphex Twin shows since the release of 2014’s Syro. Has Weirdcore’s work had to evolve as alongside Aphex’s? “Erm, kind of,” he replies. “But Richard likes doing his own thing, which always leaves me trying to find out what he’s going to play to save a certain type of visual for a certain track. He just never tells us, though. Well, sometimes he does, but then doesn’t play it anyway.” ' -Dazed, 2024

Fast Company published a breakdown of how he created the music video for 'T69 Collapse':

- The video for T69 Collapse by Aphex Twin, directed by Weirdcore, uses machine learning (ML) and neural-network techniques to generate its visuals.

- Specifically, Weirdcore used a plugin called Transfusion.AI (for Adobe After Effects) to apply “style transfer” on a collage of 3D-scanned photogrammetry data (streets and buildings from Cornwall, etc.).

- The process involved combining: photogrammetry scans (i.e. real-world 3D data), overlays of text and code fragments (some resembling email-style exchanges), and style-transfer neural-network layers creating distorted, glitchy, “hallucinatory” effects.

- Visually, at certain moments (the article points to around the 1:04 mark), buildings and landscapes warp: they oscillate, flicker and become abstract, as if reality itself is collapsing, matching the song’s themes.

- Weirdcore himself acknowledges that the result is only a first step: he described it as “scratching the surface,” hinting at further experimentation.

Rather than using hand-drawn animation, 3D CGI or standard VFX, the video blends 3D scans with machine-learning style transfer. The result is a hybrid: a real-world foundation warped through a machine's lens.

Weirdcore used MIDI data and BPM changes to sync the visual transformations to the music. He was one of the first artists to use a machine-learning plugin in professional work.
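Weirdcore's actual syncing pipeline isn't documented here, so the following is only a generic Python sketch of tempo syncing under stated assumptions: given a hypothetical tempo map of (start time, BPM) pairs and a fixed video frame rate, it lists the frames that land on a beat, where a visual change could be triggered. It assumes a beat falls exactly on each tempo change and does not model MIDI note data at all.

```python
# Hypothetical sketch: find the video frames that land on a beat,
# so a visual transformation can be triggered on those frames.


def beat_frames(tempo_map: list[tuple[float, float]], duration_s: float,
                fps: int = 30) -> list[int]:
    """Return the frame number nearest to each beat.

    tempo_map: (start_time_s, bpm) pairs sorted by start time, the first at 0.0.
    Each tempo lasts until the next entry; a beat is assumed at every tempo change.
    """
    frames = []
    for i, (start, bpm) in enumerate(tempo_map):
        end = tempo_map[i + 1][0] if i + 1 < len(tempo_map) else duration_s
        beat_len = 60.0 / bpm  # seconds per beat at this tempo
        n = 0
        # Compute each beat time from the segment start to avoid drift from repeated addition.
        while start + n * beat_len < end:
            frames.append(round((start + n * beat_len) * fps))
            n += 1
    return frames


if __name__ == "__main__":
    # 120 BPM for the first second, then the tempo halves to 60 BPM.
    print(beat_frames([(0.0, 120.0), (1.0, 60.0)], 3.0, fps=30))
```

In a render loop, checking whether the current frame is in this list (ideally stored as a set) would decide when to fire a transition.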

TouchDesigner Process

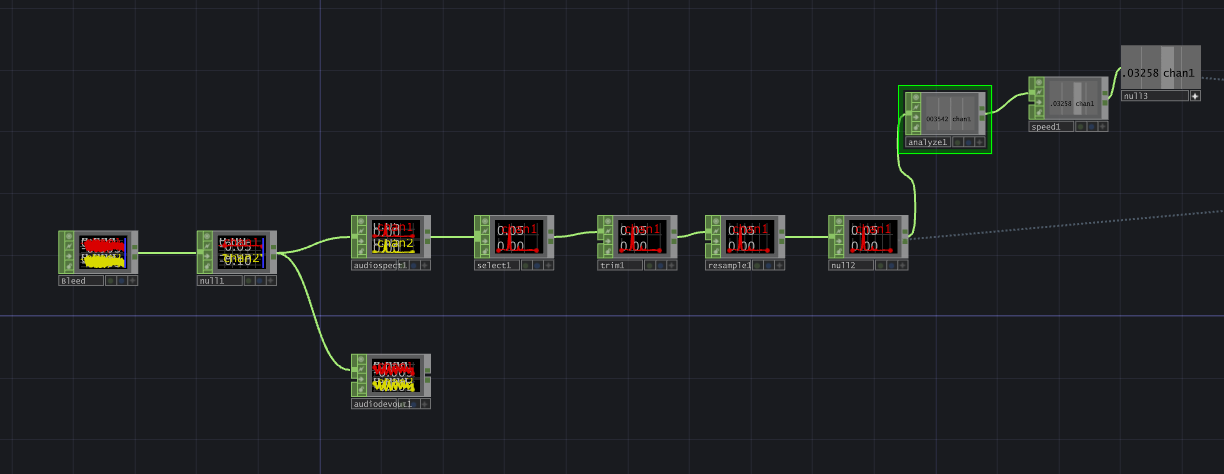

The tutorial starts with CHOPs (Channel Operators), which control the audio side of the design. First I needed an Audio File In CHOP to load my chosen music file. I then split its output into two separate branches: one let me hear the audio, and the other let me filter and analyse it.

At first the sample rate was too high, so I lowered it to a rate at which the visuals could be processed smoothly. I then added an Analyze CHOP and a Speed CHOP. Together these measured the song's volume and turned it into movement: when the song's volume went down, the speed decreased.
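The chain described above can be approximated in plain Python. This is a minimal sketch, not TouchDesigner code: it assumes a 44.1 kHz mono signal and a 60 fps animation, reduces the audio to one RMS level per video frame (RMS is one of the measurements an Analyze CHOP can take), and then accumulates that level over time, roughly the way a Speed CHOP integrates its input. The function names and the `gain` parameter are hypothetical.

```python
import math


def rms_per_frame(samples: list[float], sample_rate: int = 44100,
                  fps: int = 60) -> list[float]:
    """Reduce audio to one RMS (loudness) value per video frame.

    This mirrors lowering the rate: 44100 values per second become 60.
    """
    block = sample_rate // fps  # audio samples per video frame
    levels = []
    for start in range(0, len(samples) - block + 1, block):
        chunk = samples[start:start + block]
        levels.append(math.sqrt(sum(s * s for s in chunk) / block))
    return levels


def integrate_speed(levels: list[float], fps: int = 60,
                    gain: float = 1.0) -> list[float]:
    """Accumulate the level over time: louder audio makes the value grow faster.

    When the level drops to zero, the value stops changing, so motion driven by it slows.
    """
    position = 0.0
    positions = []
    for level in levels:
        position += gain * level / fps  # treat the level as a rate per second
        positions.append(position)
    return positions


if __name__ == "__main__":
    loud = [0.5] * 44100  # one second of a constant, loud signal
    quiet = [0.1] * 44100  # one second of a quieter signal
    print(integrate_speed(rms_per_frame(loud))[-1])
    print(integrate_speed(rms_per_frame(quiet))[-1])
```

Feeding the accumulated value (rather than the raw level) into the visuals keeps the motion smooth: the level only changes how fast things move, not where they jump to.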

The next part was my TOPs (Texture Operators). These nodes apply noise and other textures to the 3D elements of the project. Reacting to the audio, they changed the position and size of the boxes, which added movement to the project.
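To show the idea behind noise-driven movement, here is a hypothetical plain-Python sketch (not the TouchDesigner network itself): a simple 1D value-noise function gives each box a smoothly wandering position, and an audio level between 0 and 1 scales both how far the boxes move and how large they are. The hash constants, `spread`, `base_size` and `size_gain` are illustrative assumptions.

```python
import math


def value_noise(x: float, seed: int = 0) -> float:
    """Smooth 1D value noise in [-1, 1]: random values at integers, eased in between."""

    def rand(i: int) -> float:
        # Deterministic pseudo-random value in [-1, 1] for integer i (simple integer hash).
        n = (i * 374761393 + seed * 668265263) & 0xFFFFFFFF
        n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
        return (n / 0xFFFFFFFF) * 2.0 - 1.0

    i = math.floor(x)
    f = x - i
    u = f * f * (3 - 2 * f)  # smoothstep easing between neighbouring integers
    return rand(i) * (1 - u) + rand(i + 1) * u


def animate_boxes(count: int, time_s: float, level: float, spread: float = 2.0,
                  base_size: float = 0.2,
                  size_gain: float = 1.0) -> list[tuple[float, float, float]]:
    """Return (x, y, size) for each box at one moment.

    Noise sets the direction of movement; the audio level (0 to 1) scales
    how far the boxes move and how big they get.
    """
    boxes = []
    for b in range(count):
        offset = b * 10.0  # give each box its own stretch of the noise
        x = spread * level * value_noise(time_s + offset, seed=1)
        y = spread * level * value_noise(time_s + offset, seed=2)
        size = base_size * (1.0 + size_gain * level)
        boxes.append((x, y, size))
    return boxes


if __name__ == "__main__":
    for box in animate_boxes(3, time_s=1.3, level=0.8):
        print(box)
```

In the patch described above, `time_s` could be replaced by the accumulating value from the Speed CHOP, so the noise scrolls faster when the music is louder; that wiring is an assumption about how the tutorial connects the two halves.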

The final nodes connected everything together. This stage also let me add a background, a light, a camera and a render node to complete the project. Overall I was delighted to finish this project; after hours of trying, it felt like overcoming a massive learning curve.